Modern Computer Architecture and Organization


- Overview

Computer organization and architecture (COA) are two interconnected fields in computer science that deal with the design and structure of computer systems.

Computer architecture focuses on the abstract functional behavior of a system, including the instruction set, addressing modes, and data types, while computer organization delves into the physical implementation of those aspects, such as hardware components, control signals, and interfaces.

Computer organization realizes the architecture. Architecture provides the specification, and organization implements that specification. Understanding both is crucial for building efficient and effective computer systems.

- Performance Optimization: Understanding how hardware and software interact allows for writing more efficient code and optimizing system performance.

- System Design: Knowledge of COA is essential for designing new computer systems or improving existing ones.

- Problem Solving: COA helps in troubleshooting and debugging hardware and software issues.

- Computer Architecture vs Computer Organization

Computer organization and architecture (COA) are distinct but related aspects of computer system design. Architecture focuses on the abstract functionality and design of a computer system, while organization deals with the physical implementation of that design.

Architecture defines what a computer does (e.g., instruction set, data types), while organization describes how it does it (e.g., how components are interconnected, how data flows).

In essence, computer architecture is the blueprint, while computer organization is the construction based on that blueprint.

Computer Architecture:

- Definition: The functional design and structure of a computer system, specifying what the computer does at a high level.

- Focus: Instruction set, registers, data types, memory model, and addressing modes, that is, the features visible to software.

- Goal: To define the computer's capabilities and how it interacts with software.

- Example: Deciding whether to use RISC or CISC architecture, defining the number of registers, or specifying the instruction set.

- Development: Defined first in the system design process, before the organization.

Computer Organization:

- Definition: The physical implementation of the architectural design, specifying how the components are interconnected and how they operate together.

- Focus: Hardware details, control signals, and the physical connections between components.

- Goal: To realize the architectural design in a functional, physical system.

- Example: Connecting the CPU, memory, and input/output devices, designing the control unit, and specifying the data paths.

- Development: Follows the architectural design.
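
The blueprint-versus-construction distinction can be illustrated with a small sketch. It assumes a hypothetical three-instruction ISA (`LOADI`, `ADD`, `MUL`) and two invented cycle-cost tables standing in for two organizations; none of these names or numbers come from a real processor. The point is only that both organizations produce the same register contents (the architectural contract) while differing in cost (an organizational detail).

```python
# Architecture (hypothetical): the meaning of each instruction.
#   ("LOADI", rd, imm)   -> regs[rd] = imm
#   ("ADD",   rd, rs, rt) -> regs[rd] = regs[rs] + regs[rt]
#   ("MUL",   rd, rs, rt) -> regs[rd] = regs[rs] * regs[rt]

def execute(program, cycles_per_op):
    """Run a program; cycles_per_op models one particular organization."""
    regs = [0] * 4
    cycles = 0
    for op, *args in program:
        if op == "LOADI":
            rd, imm = args
            regs[rd] = imm
        elif op == "ADD":
            rd, rs, rt = args
            regs[rd] = regs[rs] + regs[rt]
        elif op == "MUL":
            rd, rs, rt = args
            regs[rd] = regs[rs] * regs[rt]
        else:
            raise ValueError(f"unknown instruction: {op}")
        cycles += cycles_per_op[op]
    return regs, cycles

# Two organizations of the same architecture (cycle counts are invented).
ORGANIZATION_A = {"LOADI": 1, "ADD": 1, "MUL": 1}
ORGANIZATION_B = {"LOADI": 2, "ADD": 3, "MUL": 6}

PROGRAM = [
    ("LOADI", 0, 6),
    ("LOADI", 1, 7),
    ("MUL", 2, 0, 1),
    ("ADD", 3, 2, 0),
]

regs_a, cycles_a = execute(PROGRAM, ORGANIZATION_A)
regs_b, cycles_b = execute(PROGRAM, ORGANIZATION_B)
print(regs_a == regs_b, cycles_a, cycles_b)
```

Software written against the instruction meanings runs unchanged on either organization; only the cycle count differs.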

- Why Study Computer Organization and Architecture?

Studying computer organization and architecture (COA) is crucial for understanding how computers work at a fundamental level, which is beneficial for both software and hardware developers, as well as for anyone who wants to understand the capabilities and limitations of the systems they use.

This knowledge helps in designing better hardware, optimizing software performance, and making informed decisions when selecting or building computer systems.

In essence, computer organization and architecture provides a bridge between the abstract world of software and the physical reality of hardware, enabling us to build better computers, write more efficient software, and make more informed decisions about the technology we use.

For Software Developers:

- Optimizing Software Performance: Understanding how the hardware works allows programmers to write more efficient code. For example, knowing how caches work can help optimize memory access patterns to reduce latency.

- Debugging and Troubleshooting: When code behaves unexpectedly, understanding the underlying hardware can help pinpoint the cause of the issue.

- Choosing the Right Tools and Technologies: Knowing the capabilities and limitations of different hardware components can help in selecting the appropriate programming languages, libraries, and tools for a specific task.

- Understanding Data Structures and Algorithms: Concepts like memory organization (stack, heap), data representation, and the impact of hardware on algorithm performance are all tied to computer organization.
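
The cache point above can be made concrete with a small simulation. This is a minimal sketch of a direct-mapped cache, assuming hypothetical parameters (64 lines of 64 bytes, a 256 x 256 matrix of 8-byte elements stored row by row); real caches are usually set-associative and considerably more complex. It counts misses for the same matrix traversed in two different orders.

```python
def count_misses(addresses, num_lines=64, line_size=64):
    """Count misses in a direct-mapped cache for a sequence of byte addresses."""
    resident = [None] * num_lines      # block number currently held by each line
    misses = 0
    for addr in addresses:
        block = addr // line_size      # which memory block the address belongs to
        index = block % num_lines      # the single cache line that block may occupy
        if resident[index] != block:   # miss: fetch the block, evicting the old one
            resident[index] = block
            misses += 1
    return misses

ROWS, COLS, ELEM_BYTES = 256, 256, 8   # assumed layout: row-major, 8-byte elements

# Same elements, two visiting orders.
row_order = [(r * COLS + c) * ELEM_BYTES for r in range(ROWS) for c in range(COLS)]
col_order = [(r * COLS + c) * ELEM_BYTES for c in range(COLS) for r in range(ROWS)]

print("row-order misses:", count_misses(row_order))
print("column-order misses:", count_misses(col_order))
```

Under these assumptions, the row-order walk misses once per 64-byte line (every eighth element), while the column-order walk jumps 2048 bytes per step and misses on every access.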

For Hardware Designers:

- Designing Efficient Systems: Understanding the principles of computer organization allows for designing processors, memory systems, and other components that are optimized for performance and power efficiency.

- Making Trade-offs: Hardware designers constantly need to make trade-offs between different design choices. Understanding the implications of these choices (e.g., speed vs. power consumption) is crucial.

- Developing New Architectures: Knowledge of computer organization is essential for pushing the boundaries of computer design and developing new types of computing systems.

For Everyone Else:

- Informed Consumer Decisions: Understanding the specifications of different computer systems allows for making informed decisions when purchasing a new computer or other devices.

- Appreciating the Complexity of Computing: Studying computer organization provides a deeper appreciation for the intricate processes that occur within a computer system.

- Understanding the Limits of Technology: Knowing the fundamental limitations of computer systems (e.g., the speed of light, the von Neumann bottleneck) can help set realistic expectations about what computers can achieve.

- Computer Architecture

Computer architecture is the functional design and structure of a computer system, defining how its hardware components work together to execute instructions and process data.

It encompasses the instruction set architecture (ISA), data paths, and control units, all of which are crucial for optimizing performance and functionality. Essentially, it's the blueprint for building a computer system, detailing how its components are organized and how they interact.

Key Aspects of Computer Architecture:

- Instruction Set Architecture (ISA): This defines the set of instructions that a processor can understand and execute. It also includes the data types, registers, and addressing modes used by the processor.

- Data Paths: These are the pathways through which data flows within the computer system, connecting different components like the CPU, memory, and I/O devices.

- Control Unit: This unit fetches instructions from memory, decodes them, and generates control signals to other components to execute the instructions.

- Performance, Efficiency, Cost, and Reliability: Computer architecture aims to balance these factors to create a well-functioning and cost-effective system.

- Hardware and Software Interaction: Computer architecture also considers how software interacts with the hardware, including the operating system and programming languages.
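
The roles of the ISA and the control unit described above can be sketched as a toy interpreter. The 16-bit instruction format, the opcode numbers, and the helper names (`encode`, `loadi`, `run`) are all hypothetical and chosen only for illustration; they do not correspond to any real processor.

```python
# Hypothetical 16-bit format: [opcode:4][rd:4][a:4][b:4]
OP_HALT, OP_LOADI, OP_ADD, OP_SUB = 0, 1, 2, 3

def encode(op, rd=0, a=0, b=0):
    """Pack four 4-bit fields into one instruction word."""
    return (op << 12) | (rd << 8) | (a << 4) | b

def loadi(rd, imm):
    """LOADI uses the a and b fields together as an 8-bit immediate."""
    return encode(OP_LOADI, rd, (imm >> 4) & 0xF, imm & 0xF)

def run(memory):
    """Fetch, decode, and execute until HALT; returns the register file."""
    regs = [0] * 16
    pc = 0
    while True:
        word = memory[pc]                 # fetch
        pc += 1
        op = (word >> 12) & 0xF           # decode
        rd = (word >> 8) & 0xF
        a = (word >> 4) & 0xF
        b = word & 0xF
        if op == OP_HALT:                 # execute
            return regs
        elif op == OP_LOADI:
            regs[rd] = (a << 4) | b
        elif op == OP_ADD:
            regs[rd] = (regs[a] + regs[b]) & 0xFFFF
        elif op == OP_SUB:
            regs[rd] = (regs[a] - regs[b]) & 0xFFFF
        else:
            raise ValueError(f"illegal opcode {op}")

program = [
    loadi(1, 40),
    loadi(2, 2),
    encode(OP_ADD, 3, 1, 2),   # r3 = r1 + r2
    encode(OP_SUB, 4, 1, 2),   # r4 = r1 - r2
    encode(OP_HALT),
]
regs = run(program)
print(regs[3], regs[4])
```

Here the encoding and instruction meanings play the part of the ISA, while the loop in `run` stands in for the control unit's fetch, decode, and execute steps.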

Computer architecture encompasses various computing paradigms, including processor-centric, memory-centric, data-centric, and net-centric computing. These approaches differ in how they prioritize and optimize the placement and processing of data within a computer system.

These paradigms are not mutually exclusive, and many systems combine elements of each to achieve optimal performance and efficiency. For example, memory-centric techniques can be integrated into data-centric architectures to further enhance performance.

- Processor-centric computing: This is the traditional approach where the processor is the central focus, and data is moved to the processor for computation.

- Memory-centric computing: This paradigm prioritizes optimizing computations within or near the memory system, aiming to reduce data movement between memory and the processor. This can lead to significant performance and energy efficiency gains, especially for memory-bound workloads.

- Data-centric computing: This approach focuses on placing computations near the data to minimize data movement. It also involves storing data independently of applications, so that applications can be upgraded without costly data migration.

- Net-centric computing: This paradigm, closely related to distributed and cloud computing, leverages networks to connect and distribute computing resources, often across multiple machines or locations.

- Processor-centric Computing

Processor-centric computing is a traditional computing model where the central processing unit (CPU) is the primary component for all data processing tasks.

In this model, data is moved from storage and memory to the CPU for computation, which can lead to bottlenecks and inefficiencies due to the speed disparity between processors and memory.

In essence, processor-centric computing is a foundational model that has limitations in handling large datasets and complex computations efficiently. Memory-centric and data-centric approaches are emerging as alternatives to address these limitations.

Key Characteristics of Processor-centric Computing:

- CPU as the Processing Hub: The CPU is the core component responsible for executing instructions and performing calculations.

- Data Movement: Data is fetched from memory and storage to the CPU for processing.

- Memory as Passive Storage: Memory only stores data and instructions for the CPU and performs no computation of its own.

- Processor-Memory Bottleneck: The speed difference between processors and memory can create a bottleneck, leading to performance limitations and energy waste.
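
A back-of-the-envelope model helps show why the processor-memory bottleneck matters. The sketch below assumes a deliberately simple estimate: runtime is the larger of the compute time and the data-movement time. The peak throughput and bandwidth figures are hypothetical round numbers, not measurements of any real machine.

```python
def estimated_time_s(flops, bytes_moved, peak_flops_per_s, bandwidth_bytes_per_s):
    """Return (estimated seconds, limiting resource) under a max() model."""
    compute_s = flops / peak_flops_per_s
    memory_s = bytes_moved / bandwidth_bytes_per_s
    bound = "memory" if memory_s > compute_s else "compute"
    return max(compute_s, memory_s), bound

# Hypothetical machine: 100 GFLOP/s peak compute, 10 GB/s memory bandwidth.
PEAK_FLOPS_PER_S = 100e9
BANDWIDTH_BYTES_PER_S = 10e9

# Workload: c[i] = a[i] + b[i] for one billion 8-byte elements.
n = 1_000_000_000
flops = n                   # one addition per element
bytes_moved = 3 * 8 * n     # read a[i], read b[i], write c[i]

time_s, bound = estimated_time_s(
    flops, bytes_moved, PEAK_FLOPS_PER_S, BANDWIDTH_BYTES_PER_S
)
print(f"{time_s:.2f} s, {bound}-bound")
```

With these assumed numbers the additions alone would take 0.01 s, but moving the operands takes 2.4 s, so the processor spends most of its time waiting for data.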

Limitations of Processor-centric Computing:

- Performance Limitations: The need to move data to the CPU for processing can significantly slow down overall system performance, especially for memory-intensive tasks.

- Energy Inefficiency: Data movement consumes a considerable amount of energy, making processor-centric systems less energy-efficient.

- Scalability Issues: As the amount of data and the complexity of computations increase, processor-centric systems can face challenges in scaling performance and efficiency.

Alternatives to Processor-centric Computing:

- Memory-centric Computing: This approach aims to move computation closer to the data, either within memory or storage, to reduce data movement and improve performance.

- Data-centric Computing: This paradigm focuses on optimizing data access and processing, potentially involving specialized hardware and software architectures.

- Net-centric Computing: This approach involves distributing applications and data across a network, enabling access on an as-needed basis.

- Memory-centric Computing

Memory-centric computing is a paradigm shift in how computers process information, moving away from the traditional von Neumann architecture where computation is separate from data storage.

Instead, it focuses on enabling computation both within and near where data is stored, like in memory chips or controllers. This approach aims to minimize the energy and performance costs associated with constantly moving data between memory and processors.

Key Concepts:

- Processing-in-Memory (PIM): This involves performing computations directly within the memory chips themselves, leveraging the inherent properties of memory structures.

- Processing-near-Memory (PNM): This involves placing processing units closer to memory, such as in memory controllers or the logic layers of 3D-stacked memory.
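
The effect of placing computation near memory can be sketched by counting the bytes that cross the memory bus. This is a toy model, assuming four memory banks and a hypothetical per-bank adder; it says nothing about how real PIM or PNM hardware is built or programmed.

```python
WORD_BYTES = 8  # assumed size of one value on the bus

def host_sum(banks):
    """Processor-centric: every value crosses the bus to be added by the CPU."""
    total, bytes_moved = 0, 0
    for bank in banks:
        for value in bank:
            bytes_moved += WORD_BYTES
            total += value
    return total, bytes_moved

def near_memory_sum(banks):
    """Near-memory: a hypothetical adder beside each bank reduces locally,
    so only one partial sum per bank crosses the bus."""
    total, bytes_moved = 0, 0
    for bank in banks:
        partial = sum(bank)          # computed next to the data
        bytes_moved += WORD_BYTES    # only the partial result is transferred
        total += partial
    return total, bytes_moved

banks = [list(range(i * 1000, (i + 1) * 1000)) for i in range(4)]

host_total, host_bytes = host_sum(banks)
pnm_total, pnm_bytes = near_memory_sum(banks)
print(host_total == pnm_total, host_bytes, pnm_bytes)
```

Both functions return the same sum; in this model the near-memory version moves 32 bytes instead of 32,000.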

Benefits of Memory-Centric Computing:

- Reduced Data Movement: By performing computations closer to or within memory, the need to constantly move data between memory and processors is minimized, leading to significant energy savings and performance improvements.

- Lower Latency: Accessing data and performing computations within or near memory reduces the latency associated with data transfer, resulting in faster processing speeds.

- Increased Parallelism: Memory arrays can be leveraged for massively parallel operations, enabling faster processing of large datasets.

- Improved Energy Efficiency: Reduced data movement translates to lower energy consumption, making memory-centric computing particularly attractive for energy-constrained devices and large-scale data centers.

- Addressing Memory Bottlenecks: Modern computing systems often face bottlenecks due to the limitations of traditional memory architectures. Memory-centric computing offers a way to overcome these bottlenecks by enabling more efficient data processing.

Examples:

- Processing-in-DRAM: Research is exploring how to modify DRAM chips to enable computation within them, potentially unlocking significant performance and energy benefits for memory-bound applications like machine learning.

- Memory-centric architectures for cloud computing: Memory-centric approaches are being explored to improve the efficiency of memory sharing and resource allocation in cloud environments.

- Applications in AI and Machine Learning: Memory-centric computing has the potential to accelerate computationally intensive AI and machine learning workloads by performing operations directly on the data stored in memory.

- Data-centric Computing

Data-centric computing is an approach that prioritizes data as the core asset in computing systems, shifting the focus from applications to the data itself. This paradigm involves rethinking hardware and software to optimize data storage, processing, and movement, ultimately aiming to extract maximum value from data. It contrasts with traditional application-centric models where applications are central and data is secondary.

In essence, data-centric computing is a shift in focus from computation to data, aiming to create more efficient, scalable, and agile systems by optimizing how data is stored, accessed, and processed.

Key Concepts:

- Data as the Primary Asset: In data-centric computing, data is treated as the most valuable and persistent element. Applications are designed to interact with and leverage this data, rather than the other way around.

- Rethinking Hardware and Software: This approach necessitates revisiting traditional computing architectures to optimize for data movement, storage, and processing.

- Computational Storage and Near-Data Processing: One key aspect involves bringing computation closer to data storage (computational storage) or using specialized hardware (near-data processing) to minimize data transfer and improve efficiency.

- Data-Aware Systems: Data-centric systems are designed to understand, classify, and manage data effectively, moving beyond simple storage to become "data-aware".

Why is Data-Centric Computing Important?

- Efficiency: By optimizing data movement and processing, data-centric computing can significantly improve efficiency, especially for workloads that deal with large datasets.

- Scalability: This approach can lead to more scalable systems, as data can be processed closer to its source or in parallel across distributed storage locations.

- Agility: Data-centric architectures can be more agile, allowing for faster updates and modifications to applications without impacting the underlying data.

- Cost Savings: Reduced data transfer and optimized processing can lead to lower energy consumption and overall cost savings in data centers.

Examples of Data-Centric Approaches:

- Computational Storage: Integrating computation directly into storage devices (e.g., SSDs, persistent memory) to process data closer to where it is stored.

- Near-Data Processing: Positioning processing units near memory or storage to reduce data movement bottlenecks.

- Data Processing Units (DPUs): Programmable processors, typically built into network interface cards, that offload data-movement tasks such as networking, storage, and security processing from CPUs.
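
The computational storage idea can be sketched as a filter that runs on the device instead of on the host. The `StorageDevice` class, its `read_all` and `query` methods, and the sample records are all hypothetical; real computational storage devices expose very different interfaces.

```python
class StorageDevice:
    """Toy storage device that counts how many records it sends to the host."""

    def __init__(self, records):
        self._records = list(records)
        self.records_transferred = 0

    def read_all(self):
        """Conventional path: ship every record to the host."""
        self.records_transferred += len(self._records)
        return list(self._records)

    def query(self, predicate):
        """Computational-storage path: filter on the device, ship only matches."""
        matches = [r for r in self._records if predicate(r)]
        self.records_transferred += len(matches)
        return matches

def is_hot(record):
    return record["temp_c"] > 90

# Invented sensor-style data: temperatures cycle through 20..119.
records = [{"id": i, "temp_c": 20 + (i % 100)} for i in range(10_000)]

conventional = StorageDevice(records)
host_filtered = [r for r in conventional.read_all() if is_hot(r)]

computational = StorageDevice(records)
device_filtered = computational.query(is_hot)

print(conventional.records_transferred, computational.records_transferred)
```

Both paths return the same matching records, but in this sketch the device-side filter transfers only the matches rather than the whole dataset.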

- Net-centric Computing (NCC)

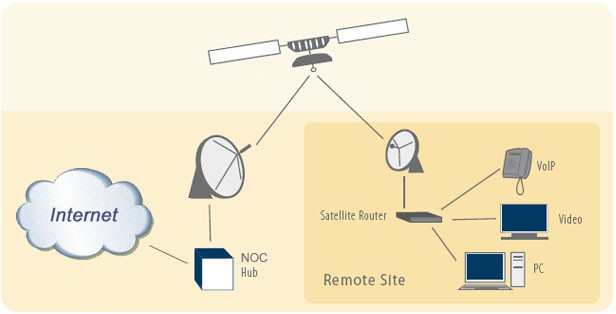

Net-centric computing (NCC) is a computing paradigm where applications and data are distributed across a network, accessible on demand, and shared between multiple devices and users. It's characterized by interconnected systems using standard communication protocols, moving away from traditional, isolated computing models. NCC is a key enabler for modern distributed systems like web applications and cloud computing.

In essence, net-centric computing represents a shift from standalone computing to a more connected and collaborative computing environment, where resources are readily available and shared across a network.

Key Concepts:

- Distributed Environment: NCC relies on a network of interconnected devices, where applications and data are not confined to a single machine.

- On-Demand Access: Users and applications can access resources (applications, data, services) as needed, rather than having them pre-installed or pre-configured on a specific device.

- Standard Protocols: NCC leverages standard communication protocols like TCP/IP and web-based protocols (HTTP, HTTPS) for seamless interaction between devices and systems.

- Dynamic and Scalable: NCC systems can adapt to changing demands by adding or removing resources as needed, making them highly scalable.

- Integration: NCC integrates various IT resources (servers, storage, etc.) into centralized repositories that are accessed through the network.
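
On-demand access over standard protocols can be sketched with Python's standard library alone. The example below starts a small HTTP server on the local machine and fetches a resource from it; the handler name, the `fetch_on_demand` helper, the `/data/report` path, and the JSON payload are hypothetical, and a real net-centric system would involve separate machines, authentication, and HTTPS.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class ResourceHandler(BaseHTTPRequestHandler):
    """Serves a tiny JSON document for any requested path."""

    def do_GET(self):
        body = json.dumps({"path": self.path, "status": "ok"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet

def fetch_on_demand(path):
    """Start a local server, request one resource over HTTP, then shut down."""
    server = HTTPServer(("127.0.0.1", 0), ResourceHandler)  # port 0: OS picks a free port
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        port = server.server_address[1]
        # Bypass any configured proxy, since the server is on the loopback interface.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(f"http://127.0.0.1:{port}{path}", timeout=5) as response:
            return json.loads(response.read().decode("utf-8"))
    finally:
        server.shutdown()
        server.server_close()

result = fetch_on_demand("/data/report")
print(result)
```

The client holds no copy of the resource in advance; it asks for it by name over HTTP when needed, which is the access pattern the list above describes.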

Examples:

- Cloud Computing: NCC is a core principle of cloud computing, where users access software, platforms, and infrastructure resources over the internet.

- Web Applications: Web applications are accessed through a web browser and rely on a network of servers to provide functionality.

- Embedded Systems: Modern embedded systems are increasingly connected to networks, requiring them to operate within a net-centric environment.

Benefits:

- Increased Flexibility and Scalability: NCC allows organizations to scale their IT infrastructure up or down based on their needs, without being tied to the capacity of a single machine or site.

- Reduced Costs: By leveraging shared resources and on-demand access, NCC can help reduce infrastructure costs.

- Improved Collaboration and Efficiency: NCC facilitates collaboration and information sharing between users and systems.

- Enhanced Accessibility: Users can access applications and data from anywhere with an internet connection.
