Foundations of Neural Networks

- Overview

Artificial neural networks (ANNs) or connectionist systems are computing systems inspired by the biological neural networks that make up animal brains. Such systems learn (gradually improve their abilities) to complete tasks by considering examples, often without being programmed for a specific task.

For example, in image recognition, they might learn to identify images that contain cats by analyzing example images that have been manually labeled "cat" or "no cat" and using the results to identify cats in other images. They have found the most use in applications that are difficult to express with traditional rule-based programming.

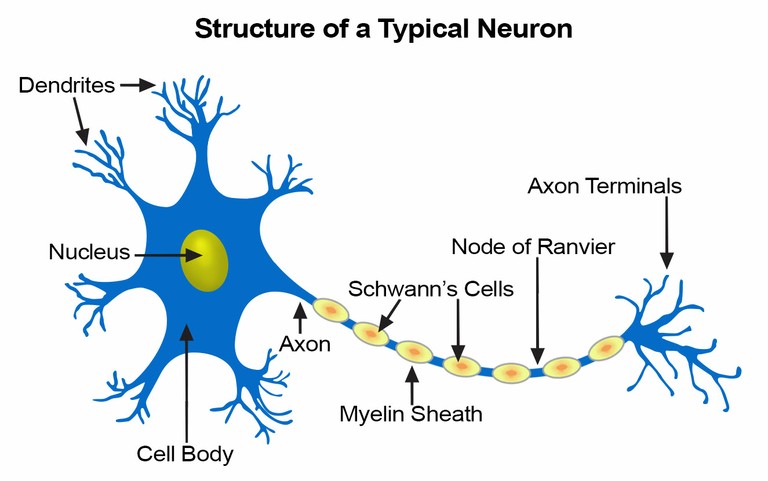

ANNs are based on collections of connected units called artificial neurons (analogous to the biological neurons in a brain). Each connection (synapse) between neurons can transmit a signal to another neuron. Receiving (post-synaptic) neurons process the signals and then pass signals on to the downstream neurons connected to them. Neurons may have a state, generally represented by a real number, typically between 0 and 1.

Normally, neurons are organized in layers. Different layers can perform different types of transformations on their inputs. Signals travel from the first (input) layer to the last (output) layer, possibly after traversing the layers multiple times.

The original goal of the neural network approach was to solve problems in the same way as the human brain. Over time, attention has focused on matching specific mental abilities, leading to deviations from biology such as backpropagation, which passes error information in the reverse direction and adjusts the network to reflect that information.

Neural networks have been used for a variety of tasks, including computer vision, speech recognition, machine translation, social network filtering, board and video games, and medical diagnosis.

As of 2017, neural networks typically have thousands to millions of units and millions of connections. Although this number is orders of magnitude smaller than the number of neurons in the human brain, these networks can perform many tasks beyond the human level (for example, recognizing faces or playing Go).

- Neural Networks in Clustering and Classification

A neural network is a set of algorithms, loosely modeled after the human brain, designed to recognize patterns. Neural networks interpret sensory data through a kind of machine perception, labeling or clustering raw input. The patterns they recognize are numerical and contained in vectors, so all real-world data, whether images, sounds, text, or time series, must be converted into vectors.
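As a small illustration of converting raw data into vectors, the sketch below encodes a short text as word counts and flattens a tiny grayscale image into a list of numbers. The vocabulary, the helper names `text_to_vector` and `image_to_vector`, and the scaling by 255 are assumptions made for this example; real pipelines use many other encodings.

```python
def text_to_vector(text, vocabulary):
    """Count how often each vocabulary word occurs in the text."""
    words = text.lower().split()
    return [words.count(term) for term in vocabulary]


def image_to_vector(pixels):
    """Flatten a 2D grid of 0-255 grayscale values into floats in [0, 1]."""
    return [value / 255 for row in pixels for value in row]


# Hypothetical vocabulary and inputs, chosen only for illustration.
vocabulary = ["cat", "dog", "sat"]
text_vec = text_to_vector("The cat sat near another cat", vocabulary)
image_vec = image_to_vector([[0, 255], [51, 204]])

print(text_vec)   # one count per vocabulary word
print(image_vec)  # one float per pixel
```

Either vector can then be fed to the input layer of a network, with one input per vector element.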

Neural networks help us cluster and classify. You can think of them as a clustering and classification layer on top of the data you store and manage. They assist in grouping unlabeled data based on similarities between example inputs and classifying data when there is a labeled data set to train on. Classification is a form of supervised learning, while clustering is a form of unsupervised learning.

Neural networks can also extract features that are fed into other algorithms for clustering and classification; so you can think of deep neural networks as components of larger ML applications involving reinforcement learning, classification, and regression algorithms.

Artificial neural networks are the basis of the large language models (LLMs) used by ChatGPT, Microsoft's Bing, Google's Bard, and Meta's Llama.

- Three Layers of a Neural Network

The brain consists of tens of billions of cells called neurons. These neurons are connected by synapses, which are the connections across which one neuron can send an impulse to another.

When a neuron sends an excitatory signal to another neuron, that signal is added to all of the other inputs of the receiving neuron. If the total exceeds a given threshold, the target neuron fires its own signal forward. In simplified form, this is how signals propagate through the brain.
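The summing-and-threshold behavior described above can be sketched in a few lines. This is a deliberately simplified model, not a description of real neurons; the function name `threshold_neuron` and the numeric values are assumptions made for illustration.

```python
def threshold_neuron(inputs, threshold):
    """Return 1 (fire) if the summed input exceeds the threshold, else 0."""
    total = sum(inputs)
    return 1 if total > threshold else 0


# Hypothetical incoming signal strengths.
weak_signals = [0.25, 0.25]          # sums to 0.5
strong_signals = [0.5, 0.5, 0.75]    # sums to 1.75

print(threshold_neuron(weak_signals, threshold=1.0))    # stays silent
print(threshold_neuron(strong_signals, threshold=1.0))  # fires
```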

An ANN can be used both as a classification technique and as a forecasting technique. It tries to simulate how the human brain works. In this technique, there are three layers: Input, Hidden, and Output.

The Input layer is mapped to the input attributes. For example, age, gender, and number of children can be the inputs to the Input layer. The Hidden layer is an intermediate layer in which each node receives every input, multiplied by a weight. The Output layer is mapped to the predicted attributes.

A neuron is a basic unit that combines multiple inputs into a single output. Inputs can be combined with different techniques; the Microsoft Neural Network uses a weighted sum. Maximum, average, logical AND, and logical OR are other techniques used by different implementations.
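The combination techniques listed above can be sketched as plain Python functions. The function names and the 0.5 cutoff used to treat a value as "true" in the logical variants are assumptions made for illustration; they are not taken from the Microsoft Neural Network implementation.

```python
def weighted_sum(inputs, weights):
    """Multiply each input by its weight and add the results."""
    return sum(x * w for x, w in zip(inputs, weights))


def maximum(inputs):
    return max(inputs)


def average(inputs):
    return sum(inputs) / len(inputs)


def logical_and(inputs, cutoff=0.5):
    """1 if every input counts as true (assumed cutoff), else 0."""
    return int(all(x >= cutoff for x in inputs))


def logical_or(inputs, cutoff=0.5):
    """1 if at least one input counts as true (assumed cutoff), else 0."""
    return int(any(x >= cutoff for x in inputs))


inputs = [1.0, 0.0, 1.0]      # hypothetical input values
weights = [0.5, 0.25, 0.25]   # hypothetical weights

print(weighted_sum(inputs, weights))
print(maximum(inputs), average(inputs))
print(logical_and(inputs), logical_or(inputs))
```

All five functions reduce several inputs to one number; they differ only in how much each input contributes to the result.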

After the inputs are combined, an activation function is applied to the result. In practice, a small input can sometimes have a large effect on the output, while a large input may be insignificant to the output.

Therefore, non-linear functions are typically used for activation. The Microsoft Neural Network uses tanh as the hidden layer activation function and the sigmoid function for the output layer.
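To make the three layers concrete, here is a minimal sketch of one forward pass that uses a weighted sum in each node, tanh in the hidden layer, and sigmoid in the output layer. All weights, biases, and input values are hand-picked, hypothetical numbers, and the helper `layer` is an assumption made for this example rather than any library's API.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum per node, then activation."""
    return [
        activation(sum(x * w for x, w in zip(inputs, node_weights)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Hypothetical, pre-scaled inputs: age, gender flag, number of children.
inputs = [0.35, 1.0, 0.2]

# Hypothetical parameters: 3 inputs -> 2 hidden nodes -> 1 output node.
hidden_weights = [[0.5, -0.4, 0.1], [-0.3, 0.8, 0.6]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.2, -0.7]]
output_biases = [0.05]

hidden = layer(inputs, hidden_weights, hidden_biases, math.tanh)
output = layer(hidden, output_weights, output_biases, sigmoid)

print(hidden)  # two values, each between -1 and 1
print(output)  # one value between 0 and 1
```

The tanh outputs fall between -1 and 1, while the sigmoid output falls between 0 and 1, which makes it convenient to read as a score for the predicted attribute.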

- The Main Objective of a Neural Network

The main objective of a neural network is to learn by automatically modifying itself so that it can perform complex tasks. Neural networks can be used to model complex relationships between inputs and outputs or to find patterns in data.

Neural networks are intricate networks of interconnected nodes, or neurons, that collaborate to tackle complicated problems. They can be written as compositions of elementary functions, typically affine transformations and nonlinear activation functions. The cost function's purpose is to calculate the error we get from our prediction. The smaller the output of the cost function, the closer the predicted value is to the actual value.
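One common choice of cost function is the mean squared error. The text above does not name a specific cost function, so treat this as one possible example; the function name and the sample values are assumptions.

```python
def mean_squared_error(predicted, actual):
    """Average of the squared differences between predictions and targets."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


actual = [1.0, 0.0, 1.0]        # hypothetical true values
close_guess = [0.9, 0.1, 0.8]   # predictions near the targets
poor_guess = [0.2, 0.9, 0.3]    # predictions far from the targets

print(mean_squared_error(close_guess, actual))  # small cost
print(mean_squared_error(poor_guess, actual))   # larger cost
```

The closer the predictions are to the actual values, the smaller the returned cost, and a perfect prediction gives a cost of zero.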

Neural networks underpin a type of ML process, called deep learning, that uses interconnected nodes or neurons in a layered structure that resembles the human brain. This creates an adaptive system that computers use to learn from their mistakes and improve continuously.

- Deep Neural Networks

A deep neural network (DNN) is an artificial neural network with multiple layers between the input and output layers. There are different types of neural networks, but they always consist of the same components: neurons, synapses, weights, biases, and functions. Together, these components function in a way that mimics functions of the human brain, and they can be trained like any other ML algorithm.

For example, a DNN that is trained to recognize dog breeds will go over the given image and calculate the probability that the dog in the image is a certain breed. The user can review the results and select which probabilities the network should display (above a certain threshold, etc.) and return the proposed label.
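The probability-and-threshold step in this example can be sketched as follows. The text does not say how the probabilities are computed, so the softmax function used here is an assumption, as are the breed names, the raw scores, and the helper `labels_above`.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # shift for stability
    total = sum(exps)
    return [e / total for e in exps]


def labels_above(probabilities, labels, threshold):
    """Keep only the (label, probability) pairs at or above the threshold."""
    return [(lab, p) for lab, p in zip(labels, probabilities) if p >= threshold]


breeds = ["beagle", "poodle", "husky"]   # hypothetical labels
raw_scores = [2.0, 0.5, 0.1]             # hypothetical network outputs

probabilities = softmax(raw_scores)
shown = labels_above(probabilities, breeds, threshold=0.15)
best_label = breeds[probabilities.index(max(probabilities))]

print(shown)       # breeds whose probability clears the threshold
print(best_label)  # the proposed label
```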

Each mathematical manipulation as such is considered a layer, and complex DNNs have many layers, hence the name "deep" neural networks. A shallow neural network, by contrast, has only one or a few hidden layers between its input and output layers. In either case, the input layer receives data, the hidden layers process it, and the final layer produces the output.

- DNN Architectures

DNNs can model complex non-linear relationships. DNN architectures generate compositional models where the object is expressed as a layered composition of primitives. The extra layers enable composition of features from lower layers, potentially modeling complex data with fewer units than a similarly performing shallow network. For instance, it was proved that sparse multivariate polynomials are exponentially easier to approximate with DNNs than with shallow networks.

Deep architectures include many variants of a few basic approaches. Each architecture has found success in specific domains. It is not always possible to compare the performance of multiple architectures, unless they have been evaluated on the same data sets.

DNNs are typically feedforward networks in which data flows from the input layer to the output layer without looping back. At first, the DNN creates a map of virtual neurons and assigns random numerical values, or "weights", to connections between them. The weights and inputs are multiplied and return an output between 0 and 1.

If the network did not accurately recognize a particular pattern, an algorithm would adjust the weights. That way the algorithm can make certain parameters more influential, until it determines the correct mathematical manipulation to fully process the data.
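The cycle of random initial weights followed by repeated adjustment can be illustrated with a single sigmoid neuron trained by gradient descent. This is a minimal sketch under stated assumptions: the logical OR data, the learning rate, the fixed random seed, and the choice of gradient descent on squared error are all picked for illustration, not taken from the text.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def total_error(weights, bias, examples):
    return sum((predict(weights, bias, x) - y) ** 2 for x, y in examples)


# Toy pattern to recognize: logical OR of two inputs.
examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

random.seed(0)  # fixed seed so the run is repeatable
weights = [random.uniform(-1, 1) for _ in range(2)]  # random starting weights
bias = random.uniform(-1, 1)
learning_rate = 0.5  # assumed value

error_before = total_error(weights, bias, examples)

for _ in range(2000):
    for inputs, target in examples:
        output = predict(weights, bias, inputs)
        # Gradient of 0.5 * (output - target)^2 with respect to the weighted sum.
        delta = (output - target) * output * (1 - output)
        weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
        bias -= learning_rate * delta

error_after = total_error(weights, bias, examples)

print(error_before, error_after)
print([round(predict(weights, bias, x)) for x, _ in examples])
```

Each pass nudges the weights in the direction that reduces the error on the current example, which is how some connections gradually become more influential than others.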

The three most common types of neural networks used in artificial intelligence (AI) are:

- Feedforward neural networks: Process data in one direction, from the input node to the output node.

- Recurrent neural networks (RNNs): A more complex type of neural network that takes the output of a processing node and transmits the information back into the network.

- Convolutional neural networks (CNNs): Apply small learned filters across grid-like inputs such as images, which makes them well suited to computer vision tasks.
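The difference between the three types can be sketched with toy, single-unit versions of each. The function names, weights, and inputs below are hypothetical, and each function is a heavily reduced stand-in for the real architecture: one unit instead of many, fixed weights instead of learned ones.

```python
import math


def feedforward_step(x, weight):
    """Feedforward: the output depends only on the current input."""
    return math.tanh(weight * x)


def recurrent_run(sequence, input_weight, state_weight):
    """Recurrent: each output is fed back in alongside the next input."""
    state = 0.0
    states = []
    for x in sequence:
        state = math.tanh(input_weight * x + state_weight * state)
        states.append(state)
    return states


def convolve_1d(signal, kernel):
    """Convolutional: slide one small kernel across the input.

    As in most deep learning libraries, the kernel is not flipped.
    """
    width = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(width))
        for i in range(len(signal) - width + 1)
    ]


print(feedforward_step(0.0, 1.0))            # zero input gives zero output
print(recurrent_run([1.0, 0.0], 1.0, 1.0))   # second output is still nonzero
print(convolve_1d([1, 2, 3, 4], [1, 0, -1])) # same kernel reused at each position
```

In the recurrent run, the second input is zero but the output is not, because the previous state is carried forward. The feedforward unit has no such memory, and the convolution reuses one small set of weights at every position of the input.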